Original Aims

Overview: This macroproject converged efforts in WPs 1, 2, and 3 in HumaneAI-Net for Y3. The high-level goal was to advance the theory and practice of interacting with LWMs (large whatever models, including language models, multimodal foundation models etc). The macroproject aimed to create a joint benchmark and first results to push research on capabilities of LWMs in interaction with humans.

Background: Common ground between humans and AI systems has been identified as a key challenge for the next generation of human AI collaboration. Different HumanE AI Net WPs have been running MPs on different aspects of this problem: from the HCI angle of interactive grounding in WP3, through interplay of perception and common ground in WP2 to learning with and from narratives in WP1. However, these efforts have not yet converged into something that the rest of the field could build on and that would touch on efforts at developing LWMs. The challenge is not only technical, but agreement should be established on how to define the joint problem.

Opportunity: Given that LWMs are essentially models of linguistic and other media captured of reality, they propose a paradigm-change in all three areas. LWMs also have potential to be a unifying factor for the different streams of work within the project. They offer unprecedented ability (e.g., in-context learning) for addressing issues related to grounding, shared perception, and coadaptation. Yet, their abilities have been found to be subtly unhuman-like in tasks like reasoning. The question stands what their limits are in collaborative tasks.

Objective: This macroproject aimed to create a common web-based benchmark to support multidisciplinary efforts at studying collaboration with LWMs. The benchmark was designed to involve tasks with two or more agents (human and AI) in a joint activity, with progress which requires grounding, perception, and coadaptation. The macroproject was designed to contain:

- A concrete definition of the problem implemented as an interactive game-like setup that allows running both AI and human agents

- A software API that allows training AI agents in a standard way

- Theories of interaction and empirical studies with human participants

- Interaction techniques, assistive AI and other facilitatory techniques to help humans in this task

- First results from state-of-the-art methods

- A community-crossing effort to draw attention to the benchmark

Scientific Results/Insights Summary

The macroproject produced the first version of the Collaborative AI Arena, which is available here https://rse-test1.cs.aalto.fi/.

One of the tasks in the arena was designed following the classic tangram puzzle as inspiration. The core concept was to develop a collaborative creation task that could progressively increase in challenge for both the AI and the human partners while keeping the same core interaction and problem-solving framework. The task consists of placing, in turns, a set of tangram pieces in a playfield with the goal of achieving a designated figure. The goal figure can be fixed, like in the case of the classic tangram, turning the task into a puzzle like collaborative problem-solving, or open, defined a word, a sentence, or a set of restrictions, enhancing the creative nature of the task. Between and during turns players (human and AI) can chat about the task, discussing the goal and the process to achieve it. The task can be changed also by giving different pieces to different players and even different sub-goals to create a mixed motive and hidden profile flavour to the collaboration. We believe that this consists of a good framing for challenges for LWM models as it raises needs to tackle conversational, spatial reasoning and problem-solving skills together with reaching common ground and joint creativity. In the MP we defined the first version of the framework in the form of a web game that engages two players (AI and human) with the collaborative task of creating a tangram figure defined by a word (e.g, house), whose form needs to be discussed, agreed and built jointly during the interaction. We also developed the first version of the AI model, based on ChatGPT, that plays the task with a human. It is able to talk about the task and play towards the creation of a figure that is defined by the initial word and constrained by the pieces placed on the playfield. A user study is planned for the near future to assess the collaborative capabilities of the AI model and the acceptance and subjective experience of the users interacting with it.

Emerging, context constrained non-verbal communication between human and machine (avatar, robot) partners in diverse environments is a highly unexplored field. Recent advances in LWM techniques with extensive prompt engineering and visual information can be sufficient as demonstrated at HHAI 2024

Innovation and Industry Cooperation Potential

Non-verbal communication has potential applications in both industrial and medical domains. It can complement verbal communication for disambiguation, making it history dependent and pragmatic. It may be necessary in noisy environments, e.g., in firefighting and disasters for controlling and collaborating with robots and drones. In the medical field it can be used for diagnostic and therapeutic purposes, e.g., in the case of autism and language impairments.

Informing Startups and SMEs about the Tangram Project (Start2 Group)

Examples of measures to disseminate and trigger involvement of industrial organizations included:

- Outreach from Start2 Group to startups as well as SMEs such as Parloa, Lengoo GmbH, Anticipate

- The current status of the tangram project was presented by Antti Oulasvirta. A discussion about industry relevance was held

Tangible Outcomes

Publications

Rui Prada, Astrid C Homan, Gerben A van Kleef: “Towards Sustainable Human-Agent Teams: A Framework for Understanding Human-Agent Team Dynamics” in the proceedings of AAMAS’2024 – the 23rd International Conference on Autonomous Agents and Multiagent Systems – Blue Sky Ideas Track, pp. 2696-2700, May 6–10, 2024, Auckland, New Zealand. IFAAMAS.

https://www.ifaamas.org/Proceedings/aamas2024/pdfs/p2696.pdf

Passant Elagroudy, Jie Li, Kaisa Väänänen, Paul Lukowicz, Hiroshi Ishii, Wendy E Mackay, Elizabeth F Churchill, Anicia Peters, Antti Oulasvirta, Rui Prada, Alexandra Diening, Giulia Barbareschi, Agnes Gruenerbl, Midori Kawaguchi, Abdallah El Ali, Fiona Draxler, Robin Welsch, Albrecht Schmidt: “Transforming HCI Research Cycles using Generative AI and “Large Whatever Models”(LWMs)” in the proceedings of CHI’2024 – Conference on Human Factors in Computing Systems – Extended Abstracts, pp. 1-5, May 11-16, 2024, Honolulu, Hawaiʻi. ACM.

https://abdoelali.com/pdfs/3613905.3643977.pdf

Inês Lobo, Janin Koch, Jennifer Renoux, Inês Batina, Rui Prada: “When Should I Lead or Follow: Understanding Initiative Levels in Human-AI Collaborative Gameplay” in proceedings of DIS’2024 – ACM Designing Interactive Systems Conference, pp 2037-2056, July 1-5, 2024, Copenhagen, Denmark, ACM.

https://dl.acm.org/doi/pdf/10.1145/3643834.3661583

Helena Lindgren, Vera C. Kaelin, Ann-Margreth Ljusbäck, Maitreyee Tewari, Michele Persiani, and Ingeborg Nilsson. 2024. To Adapt or Not to Adapt? Older Adults Enacting Agency in Dialogues with an Unknowledgeable Digital Agent. In Proceedings of the 32nd ACM Conference on User Modeling, Adaptation and Personalization (UMAP ’24), July 1–4, 2024, Cagliari, Italy. ACM, New York, NY, USA https://doi.org/10.1145/3627043.3659562

Vera C. Kaelin, Maitreyee Tewari, Sara Benouar, and Helena Lindgren. Developing Teamwork: Transitioning between stages in human-agent collaboration. To appear in Frontiers in Computer Science

Handbook chapters

János Adrián Gulyás, Miklós Máté Badó, Kristian Fenech, András Lőrincz

3 page demo paper

Keep Gesturing: A Game for Pragmatic Communication, Gulyás et al.

HHAI 2024, pp. 463-465

Submission to CHI 2025:

János Gulyás, Miklós Máté Badó, Kinga Faragó, Kristian Fenech, and András Lőrincz

Large Language Mimes: Gesture Based Human-Machine Communication in a Bi-Directional Signaling Game

Toolsets

Collaborative AI Arena: https://humane-ai.dice.aalto.fi/

Software:

https://github.com/badomate/CollaborativeAI/tree/working_demo_gesture for web-based gestural interaction in the Collaborative AI platform. May need permission from https://github.com/AaltoRSE/CollaborativeAI/pull/5 on page 5. Default LWM is GPT4o

The specific developments of the Tangram collaborative task are available here: https://github.com/GAIPS/TangramCollaborativeAI

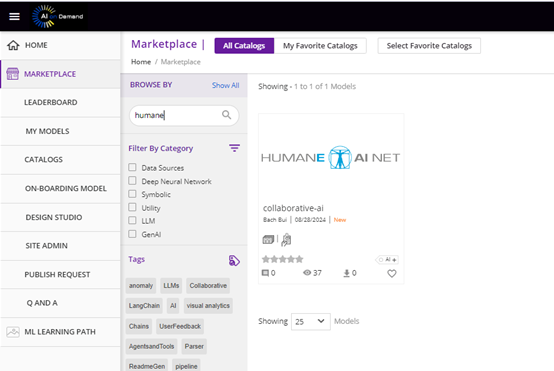

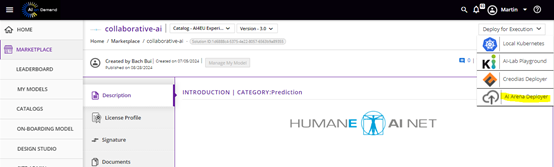

AI-Builder of the European AIoD Platform: AI-Builder that holds a catalog of AI-Models

Videos or Demos

https://youtu.be/j_bAw8e0lNU?si=STi6sbLzbpknckGG

Tangram Task demo available at:

https://gaips.github.io/TangramCollaborativeAI/

Other

Student competition, 1st Prize at ELTE

https://www.youtube.com/watch?v=WmuWaNdIpcQ (Call https://shorturl.at/SN8eE)

Events Organized/participated in

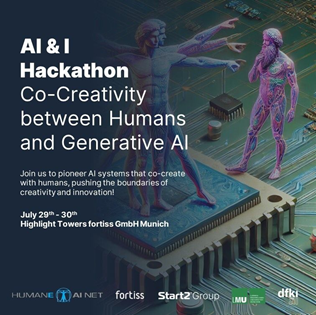

On July 29.- 30th, the “AI & I Hackathon” brought together developers, AI enthusiasts, researchers, and ambitious students for a 1.5-day deep dive into co-creativity between humans and Large Language Models (LLMs) such as OpenAI’s GPT and Google’s Gemini. Held in collaboration with fortiss, Ludwig Maximilians University, Start2 Group, and dfki, the hackathon aimed to leverage LLMs as creative collaborators rather than just passive tools, challenging participants to build systems that support human-LLM co-creation.

The two-day event was held in the fortiss office in Munich.

With 28 participants, the event welcomed a diverse international presence, with attendees from countries including the Netherlands, Sweden, Portugal, Italy, Poland, and more. Travel grants helped cover costs for those attending from outside Munich. Participants formed teams of up to four people and competed for top prizes, with 1st, 2nd, and 3rd place awards of €800, €400, and €200, respectively + cloud credits from OVH Cloud. Experts were available throughout the hackathon for consultation.

Highlights

- Collaborative Systems for Co-Creation: Teams worked to develop applications that used LLMs as interactive, idea-generating partners in content creation. Using prompts and feedback mechanisms, the projects sought to showcase how LLMs can actively contribute to generating more engaging and innovative texts.

- Inspiration Through Expert-Led Keynotes: Expert-led keynotes provided thought-provoking perspectives to set the stage. Dr. Joongi Shin (Aalto University) presented “Can LLMs Make People More Creative?” and Prof. Dr. Albrecht Schmidt (LMU) discussed “Symbiotic Creativity: The Fusion of Human Ingenuity and Machine Intelligence.” The agenda continued with Thomas Pfau (Aalto University) providing an overview of the technology stack and goals, equipping participants with the tools to start hacking.

Event Communication

To promote the event, the organizing team utilized LinkedIn channels, newsletters, and a dedicated event-website to reach a broader audience. Here are a few examples:

- Event Website: https://www.fortiss.org/en/events/ai-and-i-hackathon

- LinkedIn announcement (Start2 Group): https://www.linkedin.com/posts/start2group_aihackathon-cocreativity-llms-activity-7216097468785029120-uST0?utm_source=share&utm_medium=member_desktop

- LinkedIn announcement (fortiss):

- LinkedIn recap post (fortiss): https://www.linkedin.com/posts/fortiss_ai-and-i-hackathon-co-creativity-between-activity-7224413281833111552-mFXJ?utm_source=share&utm_medium=member_desktop

- LinkedIn Post during the Event (Albrecht Schmidt, LMU): https://www.linkedin.com/posts/albrechtschmidt_our-ai-hackathon-on-co-creativity-of-humans-activity-7223618400667766784-qOjt?utm_source=share&utm_medium=member_desktop

- Linkedin recap post from participant: https://www.linkedin.com/posts/kirti-dabas_hackathon-humancomputerinteraction-creativity-activity-7224922593173123072-vJze?utm_source=share&utm_medium=member_desktop

Hackathon Task: Developing a Prototype for Collaborative Creativity with LLMs

For this hackathon, participants were tasked with creating a prototype system designed to enhance and evaluate the collaborative creative potential between users and Large Language Models (LLMs). The objective was to build tools that facilitate seamless, innovative co-creation in text-based projects while exploring and benchmarking human-AI creativity.

Available Resources

Participants were provided with access to advanced AI models, including GPT-4, as well as various tools and endpoints for building their prototypes. They could also use a pre-designed poetry generation example to kickstart their project. Teams were encouraged to experiment with different levels of difficulty, from straightforward prompt reformulations to more complex features such as automated prompt engineering, API integrations, and even building entirely independent applications.

Final Deliverables

Each team presented a 10-minute live demo to a jury board, showcasing their prototype, followed by a Q&A. Additionally. A demo link was submitted to allow the jury to explore the prototype independently and assess its functionality and creativity-promoting capabilities.

Feedback from participants

A small survey was sent out after the Hackathon to get immediate feedback and identify areas of improvement. Even though only 8 participants took part in the survey, the results provide valuable feedback for the organizational team. The following summarizes the main findings. (Detailed survey results can be accessed through this link https://forms.gle/9Exe6RUP4njeGxCf8.)

- Overall Satisfaction: Most respondents (75%) were highly satisfied with the event’s organization and technical support.

- Positive Aspects: Attendees enjoyed the engaging tasks, inspiring keynotes, the collaborative atmosphere, and the focus on pre-built components, which allowed more time for creativity.

- Areas for Improvement: Participants highlighted the lack of feedback on project ranking and unclear judging criteria. Some felt that basic AI knowledge should have been a prerequisite, and a few noted discomfort with the provided workspace and scheduling.

- Skills Gained: Participants acquired various skills, including front-end development (React), full-stack development, prompt engineering, and multi-agent LLM-based architectures, along with teamwork and project collaboration experience.

- Additional Feedback: The participants appreciated the event organization and thanked the organizers for providing support for travel and accommodation, which enabled broader participation.

These insights reflect a well-received event with some areas for logistical and evaluative refinement.

Key Takeaways

- Future of LLMs in Collaborative Creativity: The hackathon showcased a new approach to AI, positioning LLMs as creative partners that respond to user input and inspire fresh ideas.

- Practical Applications for Co-Creative Projects: Through prompts and interactive discussions, participants explored innovative ways to engage LLMs in generating creative text in a collaborative way, driving new possibilities for human-LLM partnerships.

Conclusion

The AI and I Hackathon provided an invaluable experience for participants to explore, learn, and innovate within the rapidly evolving space of human-AI collaboration. By leveraging the expertise of the partners fortiss, LMU, and Start2 Group and dfki, this hackathon enabled developers, AI enthusiasts, and students to push the boundaries of co-creativity, setting a new standard for how LLMs can transform creative industries.

LLM4SME Workshop on July 30th 2024 in Munich

Event Overview

On July 30, 2024, fortiss hosted the workshop titled “LLM4SME: LLMs and Their Influence on Internal Business Processes” at their office in Highlight Tower, Munich. This event was organized in collaboration with Start2 Group, LMU Munich, and Bayern Innovativ, aiming to familiarize small and medium-sized enterprises (SMEs) with the potential of Large Language Models (LLMs) to optimize their business processes and improve overall efficiency. The workshop attracted representatives from SMEs looking to integrate LLM-driven innovation in areas such as customer service, content generation, and data analysis. 16 persons have been registering for the Workshop.

Event Objectives

The “LLM4SME” workshop provided insights into the rapidly evolving field of LLMs and their applications for SMEs. Participants were introduced to real-world examples and practical approaches for using LLMs to automate language-based tasks and meet the growing demands for personalized customer interactions. The event encouraged SMEs to explore how LLMs could improve their efficiency, stimulate innovation, and provide a competitive edge.

Event Communication

For promoting the event, the organization-team leveraged LinkedIn Channels, Newsletters and a dedicated event-website to attract a wider audience. In the following, there are some examples:

- Event landing page: https://www.fortiss.org/en/events/llm4sme-llms-and-their-influence-on-internal-business-processes

- LinkedIn Announcement Ai Competence Center: https://www.linkedin.com/posts/aicompetence_llm4sme-ai-largelanguagemodels-activity-7222571179482521602-ihxS?utm_source=share&utm_medium=member_desktop

- LinkedIn Announcement fortiss https://www.linkedin.com/posts/fortiss_llms-llm-kmu-activity-7200812700614983680-J8tm?utm_source=share&utm_medium=member_desktop

- LinkedIn Announcement Bayern Innovative: https://www.linkedin.com/posts/digitalisierung-bayern-innovativ-gmbh_bayerninnovativ-innovationleben-digitalisierung-activity-7207275612112171008-AOD1?utm_source=share&utm_medium=member_desktop

- LinkedIn Announcement Start2 Group: https://www.linkedin.com/posts/start2group_llms-kmus-innovation-activity-7201872388299395072-hkLw?utm_source=share&utm_medium=member_desktop

Even after the event, the communication did not end:

- Event Recap on LinkedIn from Ai Competence Center: https://www.linkedin.com/posts/aicompetence_largelanguagemodels-llm4sme-aiinnovation-activity-7227554203970236417-KxZ4?utm_source=share&utm_medium=member_desktop

- Event Recap on LinkedIn from participant: https://www.linkedin.com/posts/asaad-almutareb_ai-workflowautomation-startup-activity-7224662654152310784-tzly?utm_source=share&utm_medium=member_desktop

Highlights

- Inspiring Keynotes from Experts: Holger Pfeifer from fortiss and Dr. Sabine Wiesmüller, Director AI (Europe) at Start2, welcomed participants and set the stage for the workshop. Dr. Wiesmüller highlighted how LLMs can be leveraged to streamline tasks like data analysis and customer communication, sharing practical insights into existing AI tools that automate these processes.The workshop day concluded with an inspiring keynote by Prof. Dr. Paul Lukowicz from the German Research Center for Artificial Intelligence (DFKI), who discussed the potential of human-AI collaboration and its significance for SMEs.

- Interactive Group Workshops:

The first workshop “Process Optimization in Practice: Opportunities through LLMs for SMEs” was led by Zhiwei Han from fortiss and provided hands-on examples of how SMEs could use LLMs to enhance their processes. Participants collaborated in small groups, exchanging ideas and exploring the practical implications of implementing LLMs for optimization.

The second workshop “Effective Prompts for SMEs: Hands-On Techniques for Using LLMs” was guided by Thomas Weber from LMU. The session focussed on crafting effective prompts for LLMs, a key technique for maximizing the capabilities of these tools. Participants were encouraged to bring their laptops and actively experiment with prompt engineering techniques.

Key Outcomes

The workshop offered SMEs invaluable guidance on integrating LLMs into their operations. Interactive discussions and practical sessions provided participants with a solid foundation in LLM applications, showcasing how these AI models can drive efficiency, foster innovation, and maintain a competitive edge.

Survey: Large language Models (LLMs) for Small and Medium Enterprises (SMEs)

The study “LLMs and Their Influence on Internal Business Processes”, initiated on April 25th, 2024 and ongoing as of July 10, 2024, is a collaborative project among Ludwig-Maximilians-Universität München, fortiss GmbH, Start2 Group, and Bayern Innovativ. The survey aims to provide foundational insights for further research into AI adoption within SMEs. The survey results also informed the conceptual planning of the July 30, 2024, workshop titled “LLM4SME: LLMs and Their Influence on Internal Business Processes.” Hosted by fortiss at their Highlight Tower office in Munich, the event was organized in collaboration with Start2 Group, LMU Munich, and Bayern Innovativ (more details will be published in the Handbook, see D9.6).

The distribution of the survey was leveraged by SME Networks such as Bayern Innovativ, fortiss, KI-Hub as well as from MUK.IT and Start2 Group.

Survey Concept

This survey focuses on understanding how companies perceive and utilize AI and LLM technologies, particularly in the context of their adoption, perceived challenges, and resource requirements.

Structure and Types of Questions:

The survey includes a mix of question types:

- Multiple-choice questions – Often used for direct information gathering, such as company size, frequency of AI use, or specific departmental adoption of LLMs.

- Likert scale questions – Frequently employed to gauge attitudes, such as the importance of AI technologies to the company, perceived adoption levels, and anticipated benefits and risks across different business areas.

- Open-ended questions – These invite respondents to provide more nuanced insights on unique challenges faced, anticipated future use cases for AI, or desired areas of information to support decision-making.

Topics Covered:

The topics are organized to assess several critical areas:

- Current and Potential AI Use: This includes questions on the importance of AI to the company’s objectives, the extent of its current implementation, and specific departments where AI tools, particularly LLMs, are used or could be imagined in the future.

- Challenges and Barriers: The survey dives into the challenges companies face in adopting AI technologies, like skill shortages, high costs, data privacy concerns, technological complexity, and cultural resistance.

- Resource Needs and Plans: Respondents are asked about missing resources necessary for further AI adoption, such as specialized talent or financial support, and their plans to allocate resources (e.g., hiring, training, or outsourcing).

- Potential and Risks of LLMs: Several questions focus on evaluating LLMs’ potential benefits in areas like customer acquisition or business innovation, as well as risks such as data security or ethical concerns.

Context and Introductions:

Some questions are prefaced with short introductions, especially those involving technical terms or newer concepts (e.g., “LLMs” or “AI-driven business applications”). This approach provides clarity, helping respondents better understand the intent behind each question.

Additional Sections:

In addition to core questions, the survey concludes with consent inquiries, seeking permission to contact respondents for follow-up surveys, share results, and process personal data according to the company’s privacy policy. These elements ensure informed participation and compliance with data protection practices.

Overall, the survey is designed to capture a comprehensive view of AI integration in companies, focusing not only on current practices but also on anticipated needs, barriers, and strategic outlook. This survey template is highly adaptable and can be used across various ecosystems or geographic regions to evaluate perceptions of LLMs and AI technologies. By tailoring the questions to fit specific regional, industry, or sectoral contexts, stakeholders can gain nuanced insights into AI adoption, resource needs, and potential challenges unique to their environment. We are happy to offer consultation services to support the customization of this template, helping organizations effectively capture and analyze AI perceptions within their specific ecosystem or geographic area.

Main findings

The following presents main findings from the survey conducted among small and medium-sized enterprises (SMEs) regarding the use of large language models (LLMs) in internal business processes. A detailed analysis can be accessed here.

- Importance of AI in SMEs: Among 54 respondents, 63% of SMEs consider AI important (36%) or very important (27%), though 75% rate their adoption level as low (44%) or average (31%).

- Challenges in AI Adoption: Key challenges reported by SMEs (54 responses) include privacy concerns (11%), technology complexity (10%), and integration issues with existing systems (10%).

- Usage of LLMs in Internal Processes: Of 53 responses, 41% of SMEs use LLMs frequently (26%) or very often (15%), with the main applications in marketing (22%), IT management (19%), and customer relations (11%).

- Impact of LLMs on Efficiency: Efficiency gains from LLMs are particularly noted in Information and Technology Management, followed by marketing and CRM. 38% of SMEs (40 responses) are planning to allocate resources towards training existing staff rather than hire additional staff (16%) in order to utilize LLMs more efficiently.

- Resource Constraints for Frequent LLM Use: Privacy concerns (12%) and skilled worker shortages (12%) were the most reported limitations among 41 responses, followed closely by limited R&D resources (10%), integration challenges (10%) and poor data quality (10%).

- Perception of LLM Potential and Risks: Among 33 respondents, a significant portion views LLMs as having high business potential, though 50% express concerns about data protection and competitive pressures.

- Information Needs for Informed Decision-Making: A strong demand for information exists, especially regarding technical background, with 60% of SMEs (33 responses) seeking data protection guidance and 50% requesting practical business use cases.

Recommendations

Based on the survey results, here are targeted recommendations to support SMEs in effectively adopting and integrating LLMs into their internal processes:

- Enhance Training and Skills Development:

SMEs could benefit from dedicated training programs to improve employee skills in AI and LLMs. Given that 38% of companies plan to invest in training, tailored workshops and accessible online courses could help bridge skill gaps, making LLM technologies more approachable and enhancing internal capabilities. - Address Privacy and Data Security Concerns:

With privacy concerns cited by 11% of respondents as a key obstacle, SMEs should implement robust data protection protocols and provide guidance on regulatory compliance. Partnerships with privacy-focused AI consultancies and training on data governance can equip SMEs to safeguard sensitive information while using LLMs. - Simplify Technology Integration:

Considering that integration challenges were highlighted by 10% of respondents, SMEs would benefit from integration tools and resources to streamline the adoption of LLMs into existing workflows. Vendors could focus on providing user-friendly API interfaces and compatibility features to ease this transition. - Provide Industry-Specific Case Studies and Use Cases:

With 50% of SMEs expressing a need for practical business use cases, industry-specific examples and case studies would help SMEs visualize and plan LLM applications effectively. By observing how similar businesses leverage LLMs, SMEs can better assess potential applications and implementation strategies. - Support SMEs with Funding for R&D and Resource Allocation:

To address high costs and limited R&D resources, external support, such as grants or low-interest loans, could enable SMEs to experiment with LLMs at lower financial risk. Policymakers and industry associations could collaborate to provide such funding, fostering AI innovation within the SME sector. - Promote Ethical and Transparent AI Practices:

As LLM transparency and ethical AI practices are growing concerns, establishing clear guidelines around model transparency, accountability, and ethical considerations will help SMEs make informed choices and build trust with stakeholders. Collaborative efforts between AI providers and SMEs can focus on defining and adhering to responsible AI use.

These recommendations aim to address the specific challenges SMEs face and provide actionable steps to foster successful, scalable, and secure LLM integration.

Collaboration Outside the Consortium (in particular with AIoD etc)

The macroproject collaborated with AI on Demand platform, specifically by integrating some of the AI-Arena models into the AI-Builder (Fig. A) which provides the infrastructure and framework to execute the arena tasks (Fig. b).