Research Roadmap for European Human-Centered AI

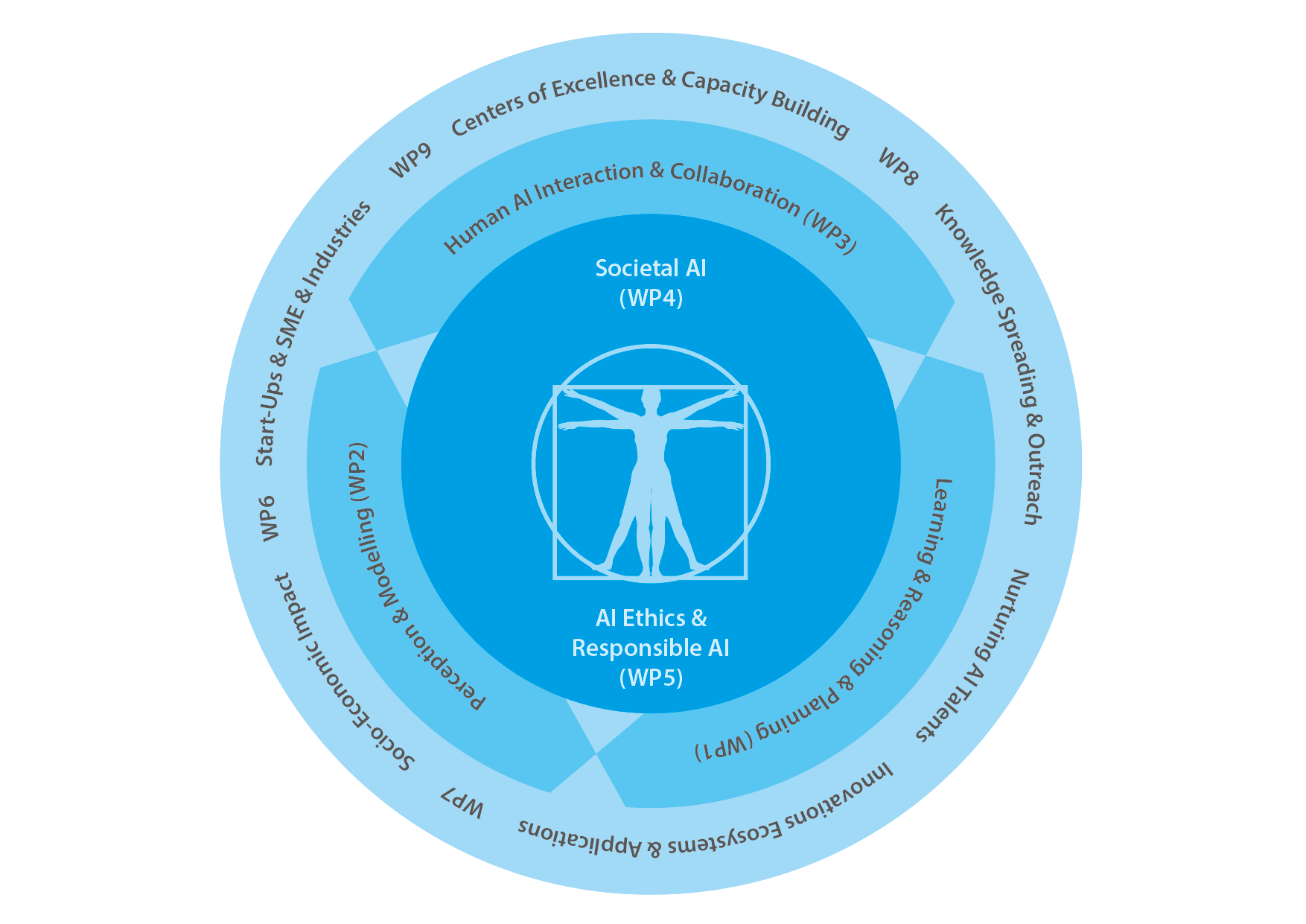

Our vision is built around ethics, values, and trust (Responsible AI). These are intimately interwoven with the impact of AI on society, including problems associated with complex dynamic interactions between networked AI systems, the environment, and humans.

Download our HumaneAI research agenda

Download our telecommunications industry research agenda in EN

Download our telecommunications industry research agenda in ES

Download our aviation industry research agenda

With respect to core AI topics, fundamental gaps in knowledge and technology must be addressed in three closely related areas:

- Learning, reasoning, and planning with humans in the loop

- Multimodal perception of dynamic real-world environments and social settings

- Human-friendly collaboration and co-creation in mixed human-AI settings

Our research agenda is therefore built on five pillars, as described below:

Pillar 1: Human-in-the-loop machine learning, reasoning, and planning

Allowing humans to not just understand and follow the learning, reasoning, and planning process of AI systems (being explainable and accountable), but also to seamlessly interact with it, guide it, and enrich it with uniquely human capabilities, knowledge about the world, and the specific user’s personal perspective. Specific topics include:

- Linking symbolic and sub-symbolic learning

- Learning with and about narratives

- Continuous and incremental learning in joint human-AI systems

- Compositionality and automated machine learning (Auto-ML)

- Quantifying model uncertainty

Pillar 2: Multimodal perception and modeling

Enabling AI systems to perceive and interpret complex real-world environments, the human actions and interactions situated in them, and the related emotions, motivations, and social structures. This requires enabling AI systems to build up and maintain comprehensive models that, in their scope and level of sophistication, strive for a more human-like understanding of the world and include common-sense knowledge that captures causality and is grounded in physical reality. Specific topics include:

- Multimodal interactive learning of models

- Multimodal perception and narrative description of actions, activities and tasks

- Multimodal perception of awareness, emotions, and attitudes

- Perception of social signals and social interaction

- Distributed collaborative perception and modeling

- Methods for overcoming the difficulty of collecting labeled training data

Pillar 3: Human-AI collaboration and interaction

Developing paradigms that allow humans and complex AI systems (including robotic systems and AI-enhanced environments) to interact and collaborate in a way that facilitates synergistic co-working, co-creation, and the enhancement of each other’s capabilities. This requires AI systems to be capable of computational self-awareness (reflexivity) regarding their functionality and performance, in relation to the relevant expectations and needs of their human partners, and of transparent, robust adaptation to dynamic, open-ended environments and situations. Above all, AI systems must become trustworthy partners for human users. Specific topics include:

- Foundations of human-AI interaction and collaboration

- Human-AI interaction and collaboration

- Reflexivity and adaptation in human-AI collaboration

- User models and interaction history

- Visualization interactions and guidance

- Trustworthy social and sociable interaction

Pillar 4: Societal awareness

Being able to model and understand the consequences of complex network effects in large-scale mixed communities of humans and AI systems interacting over various temporal and spatial scales. This includes the ability to balance the requirements of individual users against the common good and societal concerns. Specific topics include:

- Gray-box models of society-scale, networked hybrid human-AI systems

- AI systems’ individual versus collective goals

- Multimodal perception of awareness, emotions, and attitudes

- Societal impact of AI systems

- Self-organized, socially distributed information processing in AI-based techno-social systems

Pillar 5: Legal and ethical bases for responsible AI

Ensuring that the design and use of AI is aligned with ethical principles and human values, taking into account cultural and societal context, while enabling human users to act ethically and respecting their autonomy and self-determination. This also implies that AI systems must be “under the Rule of Law”: their research design, operations, and output should be contestable by those affected by their decisions, and those who put them on the market should bear liability for them. Specific topics include:

- Legal Protection by Design (LPbD)

- “Ethics by design” for autonomous and collaborative, assistive AI systems

- “Ethics in design”—methods and tools for responsibly developing AI systems