Free textbook materials

Check the free online access to the eBook conference proceedings for conference members and enjoy the Human-Centered Artificial Intelligence Advanced Lectures.

About the Course

The Advanced Course on AI (ACAI) is a specialized course in Artificial Intelligence sponsored by EurAI in odd-numbered years. The theme of the 2021 ACAI School is Human-Centered AI.

The notion of “Human Centric AI” increasingly dominates the public AI debate in Europe[1]. It postulates a “European brand” of AI beneficial to humans at both the individual and the social level, characterized by a focus on supporting and empowering humans as well as incorporating “by design” adherence to appropriate ethical standards and values such as privacy protection, autonomy (human in control), and non-discrimination. Stated this way (which is how it mostly appears in the political debate), it may seem more like a broad, vague wish list than a tangible scientific/technological concept. Yet, at second glance, it turns out to be closely connected to some of the most fundamental challenges of AI[1].

Within ACAI 2021, researchers from the HumanE-AI-Net consortium will teach courses related to the state of the art in the above areas focusing not just on narrow AI questions but emphasising issues related to the interface between AI and Human-Computer Interaction (HCI), Computational Social Science (and Complexity Science) as well as ethics and legal issues. We intend to provide the attendees with the basic knowledge needed to design, implement, operate and research the next generation of Human Centric AI systems that are focused on enhancing Human capabilities and optimally cooperating with humans on both the individual and the social level.

ACAI 2021 will have a varied format, including keynote presentations, labs/hands-on sessions, short tutorials on cutting edge topics and longer in-depth tutorials on main topics in AI.

Please check for updates!

Topics

Learning and Reasoning with Human in the Loop

Learning, reasoning, and planning are interactive processes involving close synergistic collaboration between AI system(s) and user(s) within a dynamic, possibly open-ended real-world environment. Key gaps in knowledge and technology that must be addressed toward this vision include combining symbolic-subsymbolic learning, explainability, translating a broad, vague notion of “fairness” into concrete algorithmic representations, continuous and incremental learning, compositionality of models and ways to adequately quantify and communicate model uncertainty.

Multimodal Perception

Human interaction and human collaboration depend on the ability to understand the situation and reliably assign meanings to events and actions. People infer such meanings either directly from subtle cues in behavior, emotions, and nonverbal communications or indirectly from the context and background knowledge. This requires not only the ability to sense subtle behavioral, emotional, and social cues but also the ability to automatically acquire and apply background knowledge to provide context. The acquisition must be automatic because such background knowledge is far too complex to be hand-coded. Research on artificial systems with such abilities requires a strong foundation for the perception of humans, human actions, and human environments. In HumanE AI Net, we will provide this foundation by building on recent advances in multimodal perception and modelling sensory, spatiotemporal, and conceptual phenomena.

Representations and Modeling

Perception is the association of external stimuli to an internal model. Perception and modelling are inseparable. Human ability to correctly perceive and interpret complex situations, even when given limited and/or noisy input, is inherently linked to a deep, differentiated, understanding based on the human experience. A new generation of complex modelling approaches is needed to address this key challenge of Human Centric AI including Hybrid representations that combine symbolic, compositional approaches with statistical and latent representations. Such hybrid representations will allow the benefits of data-driven learning to be combined with knowledge representations that are more compatible with the way humans view and reason about the world around them.

Human Computer Interaction (HCI)

Beyond considering the human in the loop, the goal of human-AI is to study and develop methods for combined human-machine intelligence, where AI and humans work in cooperation and collaboration. This includes principled approaches to support the synergy of human and artificial intelligence, enabling humans to continue doing what they are good at but also be in control when making decisions. It has been proposed that AI research and development should follow three objectives: (i) to technically reflect the depth characterized by human intelligence; (ii) improve human capabilities rather than replace them; and (iii) focus on AI’s impact on humans. There has also been a call for the HCI community to play an increasing role in realizing this vision, by providing their expertise in the following: human-machine integration/teaming, UI modelling and HCI design, transference of psychological theories, enhancement of existing methods, and development of HCI design standards.

Social AI

As increasingly complex sociotechnical systems emerge, consisting of many (explicitly or implicitly) interacting people and intelligent and autonomous systems, AI acquires an important societal dimension. A key observation is that a crowd of (interacting) intelligent individuals is not necessarily an intelligent crowd. The aggregated network and societal effects of AI, and their (positive or negative) impacts on society, are not sufficiently discussed in public and not sufficiently addressed by AI research, despite the striking importance of understanding and predicting the aggregated outcomes of sociotechnical AI-based systems and related complex social processes, as well as how to avoid their harmful effects. Such effects are a source of a whole new set of explainability, accountability, and trustworthiness issues, even assuming that we can solve those problems for an individual machine-learning-based AI system.

Societal, Legal and Ethical Impact

Every AI system should operate within an ethical and social framework in understandable, verifiable and justifiable ways. Such systems must in any case operate within the bounds of the rule of law, incorporating fundamental rights protection into the AI infrastructure. Theory and methods are needed for the Responsible Design of AI Systems as well as to evaluate and measure the ‘maturity’ of systems in terms of compliance with legal, ethical and societal principles. This is not merely a matter of articulating legal and ethical requirements but also involves robustness as well as social and interactivity design. Concerning the ethical and legal design of AI systems, we will clarify the difference between legal and ethical concerns, as well as their interaction. Ethical and legal scholars will work side by side to develop both legal protection by design and value-sensitive design approaches.

The 2021 ACAI School will take place on 11-14 October 2021.

We are going to use different locations all very close to each other. This allows us to keep up with the maximum occupancy restrictions:

• 3IT, Salzufer 6, Entrance: Otto-Dibelius-Strasse

• Forum Digital Technologies (FDT) // CINIQ Center: Salzufer 6 (main venue), 10587 Berlin (entrance: Otto-Dibelius-Straße)

• Loft am Salzufer: Salzufer 13-14, 10587 Berlin

• Hörsaal HHI, Fraunhofer Institute for Telecommunications (HHI): Einsteinufer 37, 10587 Berlin (across the bridge)

There will be a possibility to participate in the School's activities online.

Under the current COVID-19 regulations in Germany, we are restricted to a maximum of 60 students attending in person. The format of the event is subject to the COVID-19 regulations in force at the time of the School.

The program will be updated regularly. (Download the program)

Monday, 11 October

| Time | Session | Venue |
| --- | --- | --- |
| 09.00-09.30 | Registration | Loft |
| 09.30-10.00 | Welcome and Introduction | Loft |
| 10.00-12.00 | Mythical Ethical Principles for AI and How to Operationalise Them | Loft |
| 10.00-12.00 | Deep Learning Methods for Multimodal Human Activity Recognition | 3IT |
| 10.00-12.00 | Social Artificial Intelligence | FDT |
| 12.00-13.00 | Keynote: Yvonne Rogers | Loft |
| 13.00-14.00 | Lunch | |
| 14.00-18.00 | Why and How Should We Explain in AI? | Loft |
| 14.00-18.00 | Multimodal Perception and Interaction with Transformers | 3IT |
| 14.00-18.00 | Social Artificial Intelligence | FDT |
| 18.00-20.00 | Welcome Reception and Student Poster Mingle | Loft |

Tuesday, 12 October

| Time | Session | Venue |
| --- | --- | --- |
| 09.00-13.00 | Ethics and AI: An Interdisciplinary Approach | Hörsaal HHI |
| 09.00-13.00 | Machine Learning With Neural Networks | FDT |
| 09.00-13.00 | Social Simulation for Policy Making | 3IT |
| 13.00-14.00 | Lunch | |
| 14.00-16.00 | Learning Narrative Frameworks from Multimodal Inputs | 3IT |
| 14.00-16.00 | Interactive Robot Learning | FDT |
| 14.00-16.00 | Argumentation in AI | Hörsaal HHI |
| 16.00-17.00 | Keynote: Atlas of AI: Mapping the Wider Impacts of AI by Kate Crawford | |
| 17.00-18.00 | EurAI Dissertation Award: Unsupervised machine translation by Mikel Artetxe | |

Wednesday, 13 October

| Time | Session | Venue |
| --- | --- | --- |
| 09.00-13.00 | Law for Computer Scientists | 3IT |
| 09.00-13.00 | Computational Argumentation and Cognitive AI | FDT |
| 09.00-13.00 | Operationalising AI Ethics: Conducting Socio-Technical Assessment | Hörsaal HHI |
| 13.00-14.00 | Lunch | |
| 14.00-18.00 | Explainable Machine Learning for Trustworthy AI | FDT |
| 14.00-18.00 | Cognitive Vision: On Deep Semantics for Explainable Visuospatial Computing | 3IT |
| 14.00-18.00 | Operationalising AI Ethics: Conducting Socio-Technical Assessment | Hörsaal HHI |

Thursday, 14 October

| Time | Session | Venue |
| --- | --- | --- |
| 09.00-11.00 | Children and the Planet - The Ethics and Metrics of "Successful" AI | Loft |
| 09.00-11.00 | Learning and Reasoning with Logic Tensor Networks | FDT |
| 09.00-11.00 | Writing Science Fiction as An Inspiration for AI Research and Ethics Dissemination | 3IT |
| 11.00-13.00 | Introduction to Intelligent UIs | 3IT |
| 11.00-14.00 | Student mentorship meetings with lunch | Loft |
| 14.00-16.00 | HumaneAI-net Micro-Project Presentation | Loft |
| 16.00-18.00 | Challenges and Opportunities for Human-Centred AI: A dialogue between Yoshua Bengio and Ben Shneiderman, moderated by Virginia Dignum | Loft |
| 18.00-20.00 | ACAI 2021 Closing Reception/Welcome HumaneAI-net | Loft |

Cognitive Vision: On Deep Semantics for Explainable Visuospatial Computing, Mehul Bhatt, Örebro University - CoDesign Lab EU; Jakob Suchan, University of Bremen

(see Tutorial Outline)

Ethics and AI: An Interdisciplinary Approach, Guido Boella, Università di Torino; Maurizio Mori, Università di Torino

(see Tutorial Outline)

Children and the Planet - The Ethics and Metrics of "Successful" AI, John Havens, IEEE; Gabrielle Aruta, Filo Sofi Arts

(see Tutorial Outline)

Mythical Ethical Principles for AI and How to Operationalise Them, Marija Slavkovik, University of Bergen

(see Tutorial Outline)

Operationalising AI Ethics: Conducting Socio-Technical Assessment, Andreas Theodorou, Umeå University & VeRAI AB; Virginia Dignum, Umeå University & VeRAI AB

(see Tutorial Outline)

Explainable Machine Learning for Trustworthy AI, Fosca Giannotti, CNR; Riccardo Guidotti, University of Pisa

(see Tutorial Outline)

Why and How Should We Explain in AI?, Stefan Buijsman, TU Delft

(see Tutorial Outline)

Interactive Robot Learning, Mohamed Chetouani, Sorbonne Université

(see Tutorial Outline)

Multimodal Perception and Interaction with Transformers, Francois Yvon, Univ Paris Saclay; James Crowley, INRIA and Grenoble Institut Polytechnique

(see Tutorial Outline)

Argumentation in AI (Argumentation 1), Bettina Fazzinga, ICAR-CNR

(see Tutorial Outline)

Computational Argumentation and Cognitive AI (Argumentation 2), Emma Dietz, Airbus Central R&T; Antonis Kakas, University of Cyprus; Loizos Michael, Open University of Cyprus

(see Tutorial Outline)

Social Simulation for Policy Making, Frank Dignum, Umeå University; Loïs Vanhée, Umeå University; Fabian Lorig, Malmö University

(see Tutorial Outline)

Social Artificial Intelligence, Dino Pedreschi, University of Pisa; Frank Dignum, Umeå University

(see Tutorial Outline)

Introduction to Intelligent User Interfaces (UIs), Albrecht Schmidt, LMU Munich; Sven Mayer, LMU Munich; Daniel Buschek, University of Bayreuth

(see Tutorial Outline)

Machine Learning With Neural Networks, James Crowley, INRIA and Grenoble Institut Polytechnique

(see Tutorial Outline)

Deep Learning Methods for Multimodal Human Activity Recognition, Paul Lukowicz, DFKI/TU Kaiserslautern

Learning and Reasoning with Logic Tensor Networks, Luciano Serafini, Fondazione Bruno Kessler

Learning Narrative Frameworks From Multi-Modal Inputs, Luc Steels, Universitat Pompeu Fabra Barcelona

(see Tutorial Outline)

Law for Computer Scientists, Mireille Hildebrandt, Vrije Universiteit Brussel; Arno De Bois, Vrije Universiteit Brussel

(see Tutorial Outline)

Writing Science Fiction as An Inspiration for AI Research and Ethics Dissemination, Carme Torras, UPC

(see Tutorial Outline)

Yoshua Bengio, MILA, Quebec

Recognized worldwide as one of the leading experts in artificial intelligence, Yoshua Bengio is best known for his pioneering work in deep learning, which earned him the 2018 A.M. Turing Award, “the Nobel Prize of Computing,” with Geoffrey Hinton and Yann LeCun. He is a Full Professor at Université de Montréal, and the Founder and Scientific Director of Mila – Quebec AI Institute. He co-directs the CIFAR Learning in Machines & Brains program as Senior Fellow and acts as Scientific Director of IVADO. In 2019, he was awarded the prestigious Killam Prize, and in 2021 he became the second most cited computer scientist in the world. He is a Fellow of both the Royal Society of London and the Royal Society of Canada and an Officer of the Order of Canada. Concerned about the social impact of AI and the objective that AI benefits all, he actively contributed to the Montreal Declaration for the Responsible Development of Artificial Intelligence.

Kate Crawford

Kate Crawford, Professor, is a leading international scholar of the social and political implications of artificial intelligence. Her work focuses on understanding large-scale data systems in the wider contexts of history, politics, labor, and the environment. She is a Research Professor of Communication and STS at USC Annenberg, a Senior Principal Researcher at Microsoft Research New York, and an Honorary Professor at the University of Sydney. She is the inaugural Visiting Chair for AI and Justice at the École Normale Supérieure in Paris, where she co-leads the international working group on the Foundations of Machine Learning. Over her twenty-year research career, she has also produced groundbreaking creative collaborations and visual investigations. Her project Anatomy of an AI System with Vladan Joler won the Beazley Design of the Year Award, and is in the permanent collection of the Museum of Modern Art in New York and the V&A in London. Her collaboration with the artist Trevor Paglen produced Training Humans – the first major exhibition of the images used to train AI systems. Their investigative project, Excavating AI, won the Ayrton Prize from the British Society for the History of Science. Crawford's latest book, Atlas of AI: Power, Politics, and the Planetary Costs of Artificial Intelligence (Yale University Press), has been described as “a fascinating history of data” by the New Yorker and a “timely and urgent contribution” by Science, and was named one of the best books on technology in 2021 by the Financial Times.

Yvonne Rogers, UCLIC - UCL Interaction Centre

Yvonne Rogers is a Professor of Interaction Design, the director of UCLIC and a deputy head of the Computer Science department at University College London. Her research interests are in the areas of interaction design, human-computer interaction and ubiquitous computing. A central theme of her work is designing interactive technologies that augment humans. The current focus of her research is on human-data interaction and human-centered AI. Central to her work is a critical stance towards how visions, theories and frameworks shape the fields of HCI, cognitive science and Ubicomp. She has been instrumental in promulgating new theories (e.g., external cognition), alternative methodologies (e.g., in the wild studies) and far-reaching research agendas (e.g., "Being Human: HCI in 2020"). She has also published two monographs, "HCI Theory: Classical, Modern and Contemporary" and "Research in the Wild" (with Paul Marshall). She is a fellow of the ACM, BCS and the ACM CHI Academy.

Ben Shneiderman, University of Maryland

Ben Shneiderman is an Emeritus Distinguished University Professor in the Department of Computer Science, Founding Director (1983-2000) of the Human-Computer Interaction Laboratory, and a Member of the UM Institute for Advanced Computer Studies (UMIACS) at the University of Maryland. He is a Fellow of the AAAS, ACM, IEEE, NAI, and the Visualization Academy and a Member of the U.S. National Academy of Engineering. He has received six honorary doctorates in recognition of his pioneering contributions to human-computer interaction and information visualization. His widely-used contributions include the clickable highlighted web-links, high-precision touchscreen keyboards for mobile devices, and tagging for photos. Shneiderman’s information visualization innovations include dynamic query sliders for Spotfire, the development of treemaps for viewing hierarchical data, novel network visualizations for NodeXL, and event sequence analysis for electronic health records. Ben is the lead author of Designing the User Interface: Strategies for Effective Human-Computer Interaction (6th ed., 2016). He co-authored Readings in Information Visualization: Using Vision to Think (1999) and Analyzing Social Media Networks with NodeXL (2nd edition, 2019). His book Leonardo’s Laptop (MIT Press) won the IEEE book award for Distinguished Literary Contribution. The New ABCs of Research: Achieving Breakthrough Collaborations (Oxford, 2016) describes how research can produce higher impacts. His forthcoming book on Human-Centered AI will be published by Oxford University Press in January 2022.

Mikel Artetxe at Facebook AI Research has been selected as the winner of the EurAI Doctoral Dissertation Award 2021.

In his PhD research, Mikel Artetxe has fundamentally transformed the field of machine translation by showing that unsupervised machine translation systems can be competitive with traditional, supervised methods. This is a game-changing finding which has already made a huge impact on the field. To solve the challenging problem of unsupervised machine translation, he first introduced an innovative strategy for aligning word embeddings from different languages, which are then used to induce bilingual dictionaries in a fully automated way. These bilingual dictionaries are subsequently used in combination with monolingual language models, as well as denoising and back-translation strategies, to end up with a full machine translation system.

The EurAI Doctoral Dissertation Award will be officially presented at ACAI 2021 on Tuesday, October 12th, at 17.00 (CET). Mikel Artetxe will also give a talk:

Title: Unsupervised machine translation

Abstract: While modern machine translation has relied on large parallel corpora, a recent line of work has managed to train machine translation systems in an unsupervised way, using monolingual corpora alone. Most existing approaches rely on either cross-lingual word embeddings or deep multilingual pre-training for initialization, and further improve this system through iterative back-translation. In this talk, I will give an overview of this area, focusing on our own work on cross-lingual word embedding mappings, and both unsupervised neural and statistical machine translation.
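For readers unfamiliar with cross-lingual word embedding mappings, the following is a minimal illustrative sketch, not the method presented in the talk. It uses a simpler, supervised stand-in: an orthogonal (Procrustes) mapping learned from a small seed dictionary, followed by nearest-neighbour dictionary induction. The embeddings are synthetic, and all function and variable names (`learn_orthogonal_map`, `induce_dictionary`, `seed`) are assumptions made for the example.

```python
import numpy as np


def learn_orthogonal_map(src, tgt):
    """Orthogonal Procrustes: find orthogonal W minimising ||src @ W - tgt||_F."""
    u, _, vt = np.linalg.svd(src.T @ tgt)
    return u @ vt


def induce_dictionary(src, tgt, w):
    """Map source vectors into the target space and return, for each source
    word, the index of its nearest target word by cosine similarity."""
    mapped = src @ w
    mapped = mapped / np.linalg.norm(mapped, axis=1, keepdims=True)
    tgt_unit = tgt / np.linalg.norm(tgt, axis=1, keepdims=True)
    return np.argmax(mapped @ tgt_unit.T, axis=1)


# Synthetic data (assumption): the "source language" embeddings are an exact
# rotation of the "target language" embeddings, so word i translates to word i.
rng = np.random.default_rng(0)
n_words, dim = 50, 8
tgt_emb = rng.normal(size=(n_words, dim))
q, _ = np.linalg.qr(rng.normal(size=(dim, dim)))  # hidden orthogonal rotation
src_emb = tgt_emb @ q.T

# Hypothetical seed dictionary: the first 20 word pairs are assumed known.
seed = np.arange(20)
w = learn_orthogonal_map(src_emb[seed], tgt_emb[seed])

translations = induce_dictionary(src_emb, tgt_emb, w)
accuracy = float(np.mean(translations == np.arange(n_words)))
print(f"induced dictionary accuracy: {accuracy:.2f}")
```

In a fully unsupervised setting no seed dictionary is available, so the initial alignment has to be bootstrapped from the monolingual embeddings themselves; the sketch above only illustrates the mapping and induction steps.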

The number of places for on-site participation is limited. The registration is now closed.

| | Early-bird registration (15 September) | Late registration (after 16 September) |
| --- | --- | --- |
| (PhD) Student | 250€ | 300€ |
| Non-student | 400€ | 450€ |

Members of EurAI member societies are eligible for a discount (30€).

Students attending on-site will have an opportunity to apply for scholarships.

By registering, you

- commit to attend the ACAI2021 School and do the assignments (where applicable),

- commit to receiving further instructions,

- confirm having acquired approval for participation in ACAI2021 School from your supervisor (where applicable).

Please note, the registration fee does not cover accommodation or travel costs.

Please check the information on entry restrictions, testing and quarantine regulations in Germany.

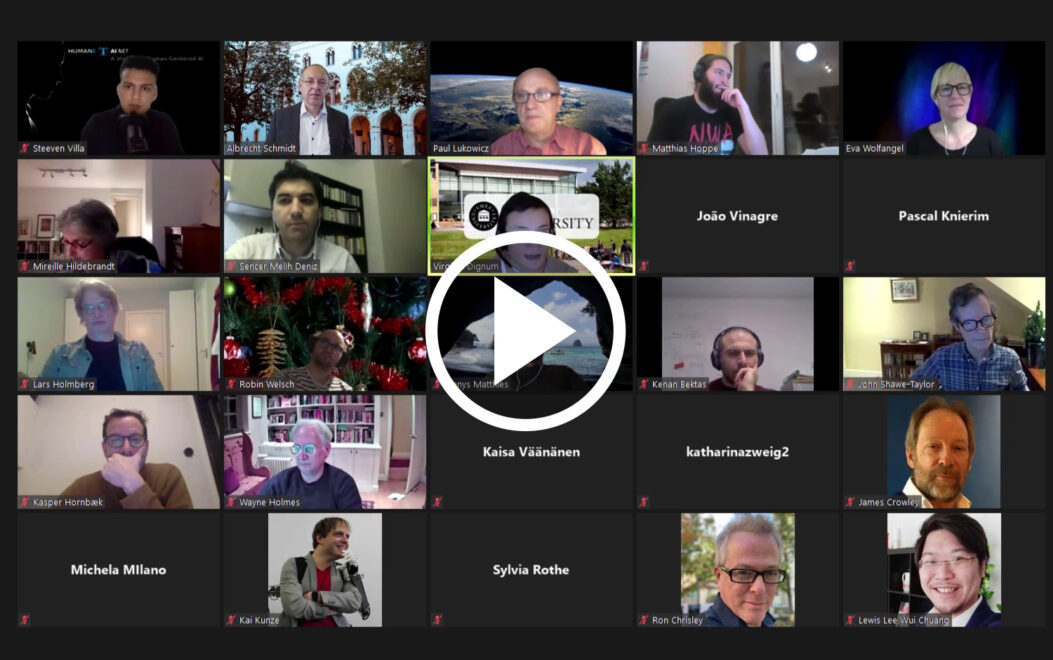

Virginia Dignum, Umeå University

ACAI 2021 General Chair

Paul Lukowicz, German Research Center for Artificial Intelligence

ACAI 2021 General Chair

Mohamed Chetouani, Sorbonne Université

ACAI 2021 Publications Chair

Davor Orlic, Knowledge 4 All Foundation

ACAI 2021 Publicity Chair

Tatyana Sarayeva, Umeå University

ACAI 2021 Organising Chair

Michael Wooldridge, Oxford University
