Organizers

- Joongi Shin (Aalto University, Finland)

- Janin Koch (LISN, Université Paris-Saclay, CNRS, Inria, France)

- Andrés Lucero (Aalto University, Finland)

- Peter Dalsgaard (Aarhus University, Denmark)

- Wendy E. Mackay (LISN, Université Paris-Saclay, CNRS, Inria, France)

Event Contact

- Janin Koch (LISN, Université Paris-Saclay, CNRS, Inria, France)

Programme

| Time | Speaker | Description |

|---|---|---|

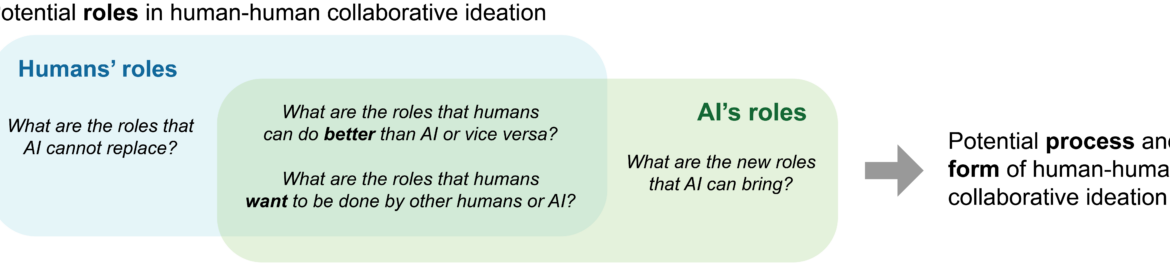

| Morning session | All participants | Co-design the roles of AI in human-human collaborative ideation |

| Afternoon session | All participants | Co-design the process and form of human-human collaborative ideation |

Background

People can generate more innovative ideas when they collaborate with one another, collectively exploring ideas and exchanging viewpoints. Advances in artificial intelligence have opened up new opportunities in creative activities, where individual users ideate with diverse forms of AI. For instance, AI agents and intelligent tools have been designed as ideation partners that provide inspiration, suggest ideation methods, or generate alternative ideas. However, what AI can bring to collaborative ideation among a group of users remains poorly understood. Compared with supporting a single user, supporting ideation among multiple users requires understanding their social interactions, turning individual efforts into a group effort, and ultimately leaving users satisfied with their collaboration with other group members. This workshop aims to bring together a community of researchers and practitioners to explore the integration of AI into human-human collaborative ideation. The exploration will center on identifying the potential roles of AI, as well as the process and form of collaborative ideation, considering what users want to do with AI and what they want to do with other people.